Jacky

Teaching Potato Computer Science

Broker

Cluster Membership

When we first configure a broker, we give it a unique broker.id in its configuration file.

Kafka uses Apache ZooKeeper to maintain the list of brokers that are currently members of the cluster. Every time a broker process starts, it registers itself in ZooKeeper under its unique id by creating an ephemeral node.

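Different Kafka components subscribe to the /brokers/ids path in ZooKeeper, where brokers are registered, so they get notified when brokers are added or removed. Because the node is ephemeral, it is deleted automatically when the broker's ZooKeeper session ends.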
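To make the membership mechanism concrete, here is a minimal in-memory sketch, assuming a toy model of ZooKeeper rather than a real client. `FakeZooKeeper`, `create_ephemeral`, `close_session` and `watch_children` are hypothetical names invented for illustration. Each ephemeral node is tied to the session that created it, so ending a session removes the broker's node and notifies everyone watching `/brokers/ids`. Registering the same path twice is rejected, which mirrors a second broker trying to start with a duplicate broker.id. Real ZooKeeper watches fire once and must be re-registered; the callbacks here are persistent only to keep the sketch short.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class FakeZooKeeper:
    """Hypothetical in-memory stand-in for ZooKeeper (not a real client)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, int] = {}  # node path -> id of the session that owns it
        self.watchers: Dict[str, List[Callable[[List[str]], None]]] = defaultdict(list)

    def create_ephemeral(self, path: str, session: int) -> None:
        # A second broker registering the same broker.id is rejected.
        if path in self.nodes:
            raise KeyError(f"node already exists: {path}")
        self.nodes[path] = session
        self._notify(path.rsplit("/", 1)[0])

    def close_session(self, session: int) -> None:
        # Ephemeral nodes vanish when their owning session ends (shutdown, crash, expiry).
        for path in [p for p, s in self.nodes.items() if s == session]:
            del self.nodes[path]
            self._notify(path.rsplit("/", 1)[0])

    def children(self, parent: str) -> List[str]:
        prefix = parent + "/"
        return sorted(p[len(prefix):] for p in self.nodes if p.startswith(prefix))

    def watch_children(self, parent: str, callback: Callable[[List[str]], None]) -> None:
        # Persistent callback for simplicity; real ZooKeeper watches are one-shot.
        self.watchers[parent].append(callback)

    def _notify(self, parent: str) -> None:
        for callback in self.watchers[parent]:
            callback(self.children(parent))


# Usage: two brokers register, then broker 0's session ends.
zk = FakeZooKeeper()
zk.watch_children("/brokers/ids", lambda ids: print("live brokers:", ids))
zk.create_ephemeral("/brokers/ids/0", session=100)  # live brokers: ['0']
zk.create_ephemeral("/brokers/ids/1", session=101)  # live brokers: ['0', '1']
zk.close_session(100)                               # live brokers: ['1']
```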

Controller

The controller is one of the Kafka brokers that, in addition to the usual broker functionality, is responsible for electing partition leaders and for other administrative tasks.

The first broker that starts in the cluster becomes the controller by creating an ephemeral node in ZooKeeper called /controller.
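The election itself is just a race to create one node. Below is a minimal sketch, again assuming a toy in-memory model instead of a real ZooKeeper client; `FakeZooKeeper`, `try_become_controller` and `current_controller` are hypothetical names. ZooKeeper guarantees that only one create of a given path succeeds, so the first broker wins and the rest see that the node already exists. The second half of the usage shows re-election: when the controller's session ends, its ephemeral `/controller` node disappears and the brokers watching it try again.

```python
from typing import Dict, Optional


class FakeZooKeeper:
    """Hypothetical in-memory stand-in for ZooKeeper (not a real client)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, int] = {}  # node path -> data stored in the node

    def create_ephemeral(self, path: str, data: int) -> bool:
        # Creates are atomic: exactly one creator wins, the others see "node exists".
        if path in self.nodes:
            return False
        self.nodes[path] = data
        return True

    def delete(self, path: str) -> None:
        # Models the ephemeral node disappearing when its owner's session ends.
        self.nodes.pop(path, None)


def try_become_controller(zk: FakeZooKeeper, broker_id: int) -> bool:
    """Every starting broker tries to create /controller; only the first succeeds."""
    return zk.create_ephemeral("/controller", broker_id)


def current_controller(zk: FakeZooKeeper) -> Optional[int]:
    return zk.nodes.get("/controller")


# Usage: brokers 1, 2 and 3 start in that order.
zk = FakeZooKeeper()
won = [b for b in (1, 2, 3) if try_become_controller(zk, b)]
print(won, current_controller(zk))  # [1] 1

# Broker 1 dies; the surviving brokers, which were watching /controller, retry.
zk.delete("/controller")
for b in (2, 3):
    try_become_controller(zk, b)
print(current_controller(zk))  # 2
```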

Replication

Kafka's replication design draws mainly on Microsoft's PacificA.

The unit of replication is the topic partition. Under non-failure conditions, each partition in Kafka has a single leader and zero or more followers. The total number of replicas, including the leader, constitutes the replication factor.

For each Kafka node, liveness has two conditions:

-

A node must be able to maintain its session with ZooKeeper (via ZK’s heartbeat mechanism)

-

If it is a follower, it must replicate the writes happening on the leader and not fall "too far" behind

We refer to nodes satisfying these two conditions as being "in sync" to avoid the vagueness of "alive" or "failed".

The leader keeps track of the set of in-sync nodes. If a follower dies, gets stuck, or falls behind, the leader removes it from the list of in-sync replicas (ISR). The determination of stuck and lagging replicas is controlled by the replica.lag.time.max.ms configuration.
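The bookkeeping described above can be sketched as follows. This is a simplified model, not Kafka's actual code: `PartitionState`, `on_follower_fetch` and `shrink_isr` are hypothetical names, and the 30 000 ms lag limit is an assumed value for the sketch. A follower counts as lagging when it has not caught up to the leader's log end within `REPLICA_LAG_TIME_MAX_MS`; the `session_alive` callback stands in for the ZooKeeper-session condition. For brevity both liveness conditions are checked in one place, whereas in Kafka a lost session is handled by the controller rather than the leader, and the real rule for re-joining the ISR is somewhat different from the one used here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

# Assumed value for this sketch; check replica.lag.time.max.ms on your brokers.
REPLICA_LAG_TIME_MAX_MS = 30_000


@dataclass
class PartitionState:
    """Hypothetical leader-side view of one partition (not Kafka's real class)."""
    leader: int
    replicas: List[int]  # all assigned replicas; len(replicas) is the replication factor
    log_end_offset: int = 0
    last_caught_up_ms: Dict[int, int] = field(default_factory=dict)
    isr: Set[int] = field(default_factory=set)

    def __post_init__(self) -> None:
        self.isr = set(self.replicas)  # every replica starts out in sync
        for r in self.replicas:
            if r != self.leader:
                self.last_caught_up_ms[r] = 0

    def append(self, n_messages: int) -> None:
        self.log_end_offset += n_messages

    def on_follower_fetch(self, follower: int, fetch_offset: int, now_ms: int) -> None:
        # A fetch that reaches the leader's log end means the follower is caught up.
        if fetch_offset >= self.log_end_offset:
            self.last_caught_up_ms[follower] = now_ms
            self.isr.add(follower)  # simplified rule for re-joining the ISR

    def shrink_isr(self, now_ms: int, session_alive: Callable[[int], bool]) -> None:
        for r in sorted(self.isr - {self.leader}):
            lagging = now_ms - self.last_caught_up_ms[r] > REPLICA_LAG_TIME_MAX_MS
            if lagging or not session_alive(r):
                self.isr.discard(r)


# Usage: follower 2 keeps up, follower 3 falls behind for more than 30 s.
p = PartitionState(leader=1, replicas=[1, 2, 3])
p.append(100)
p.on_follower_fetch(2, fetch_offset=100, now_ms=25_000)  # follower 2 caught up
p.on_follower_fetch(3, fetch_offset=40, now_ms=25_000)   # follower 3 still behind
p.shrink_isr(now_ms=31_000, session_alive=lambda b: True)
print(sorted(p.isr))  # [1, 2]
```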

Replica Assignment

Replica assignment has three goals:

-

Spread the replicas evenly among brokers

-

For partitions assigned to a particular broker, their other replicas are spread over the other brokers

-

If all brokers have rack information, assign the replicas for each partition to different racks if possible
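The first two goals can be met with a round-robin placement. The sketch below is modeled on the rack-unaware algorithm that, as far as I know, Kafka's `AdminUtils` uses; `assign_replicas` and `replica_index` are hypothetical names. Leaders rotate over the brokers, each follower is placed at a non-zero offset from its leader, and the offset is shifted after every full pass so that the followers of one broker's partitions spread over the other brokers. Kafka picks the start index and initial shift randomly; here both default to 0 so the output is reproducible. Rack awareness (the third goal) is left out.

```python
from collections import Counter
from typing import Dict, List


def replica_index(first: int, shift: int, j: int, n: int) -> int:
    """Broker index of follower j, at an offset of 1..n-1 from the first replica (never 0)."""
    offset = 1 + (shift + j) % (n - 1)
    return (first + offset) % n


def assign_replicas(brokers: List[int], n_partitions: int, rf: int,
                    start_index: int = 0, start_shift: int = 0) -> Dict[int, List[int]]:
    n = len(brokers)
    if not 1 <= rf <= n:
        raise ValueError("replication factor must be between 1 and the broker count")
    assignment: Dict[int, List[int]] = {}
    shift = start_shift
    for p in range(n_partitions):
        # After each full pass over the brokers, bump the shift so the followers
        # of a given leader land on different brokers than last time (goal 2).
        if p > 0 and p % n == 0:
            shift += 1
        first = (start_index + p) % n  # leaders rotate round-robin (goal 1)
        replicas = [brokers[first]]
        for j in range(rf - 1):
            replicas.append(brokers[replica_index(first, shift, j, n)])
        assignment[p] = replicas
    return assignment


# Usage: 8 partitions, replication factor 3, on 4 brokers.
plan = assign_replicas([0, 1, 2, 3], n_partitions=8, rf=3)
for p, replicas in plan.items():
    print(p, replicas)
print(Counter(b for r in plan.values() for b in r))  # every broker holds 6 replicas
```

With these defaults, broker 0 leads partitions 0 and 4, whose followers are [1, 2] and [2, 3] respectively, so the copies of broker 0's data are spread over all three other brokers.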

Log Compaction

…

Further Readings

-

PacificA: Replication in Log-Based Distributed Storage Systems

-

Kafka Detailed Replication Design V3

(somewhat outdated)

-

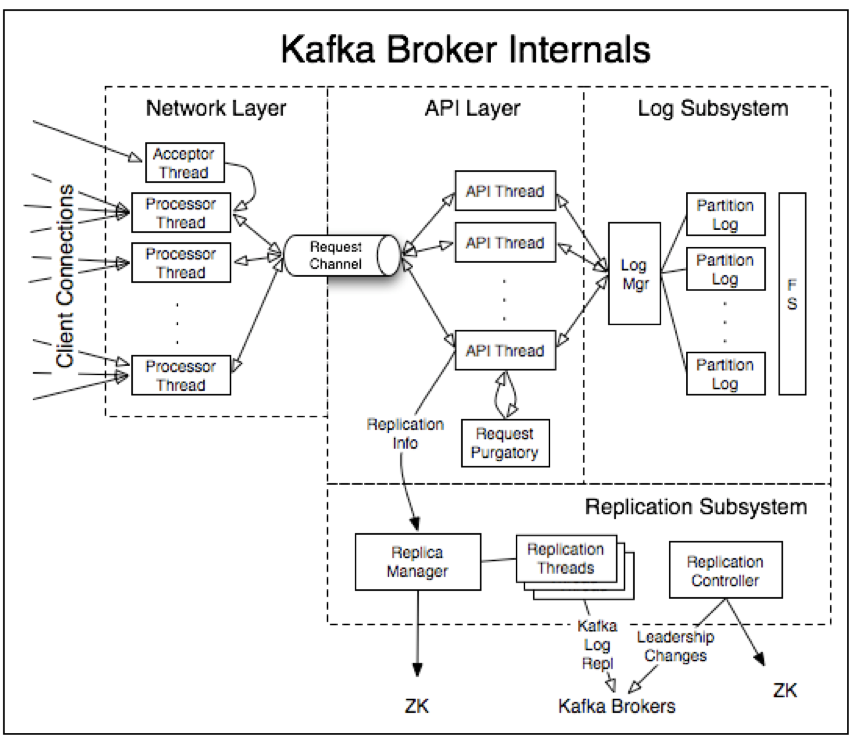

The request purgatory is a holding pen for requests waiting to be satisfied (Delayed).

Source
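The purgatory idea can be illustrated with a small sketch: a request that cannot be answered yet (for example, an acks=all produce request waiting for followers to replicate, or a fetch waiting for enough data) is parked under one or more keys, re-checked when something relevant changes, and answered with a timeout if its deadline passes first. The method names loosely echo Kafka's `DelayedOperationPurgatory`, but `DelayedOp`, `Purgatory` and everything in them are hypothetical simplifications; Kafka's own implementation is more involved and uses a hierarchical timing wheel rather than the linear scan in `expire`.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class DelayedOp:
    """A parked request: completes when is_satisfied() holds or the deadline passes."""
    deadline_ms: int
    is_satisfied: Callable[[], bool]
    on_complete: Callable[[bool], None]  # called with True if satisfied, False on timeout
    done: bool = False

    def try_complete(self) -> bool:
        if not self.done and self.is_satisfied():
            self.done = True
            self.on_complete(True)
        return self.done


class Purgatory:
    """Hypothetical, simplified purgatory: ops are watched under one or more keys."""

    def __init__(self) -> None:
        self.watchers: Dict[str, List[DelayedOp]] = {}

    def try_complete_else_watch(self, op: DelayedOp, keys: List[str]) -> bool:
        # Complete immediately if possible; otherwise park the op under each key.
        if op.try_complete():
            return True
        for key in keys:
            self.watchers.setdefault(key, []).append(op)
        return False

    def check_and_complete(self, key: str) -> None:
        # Called when something relevant to `key` changes (e.g. a follower fetch).
        ops = self.watchers.get(key, [])
        for op in ops:
            op.try_complete()
        self.watchers[key] = [op for op in ops if not op.done]

    def expire(self, now_ms: int) -> None:
        # Linear scan for clarity; a real implementation would use a timer structure.
        for key, ops in list(self.watchers.items()):
            for op in ops:
                if not op.done and now_ms >= op.deadline_ms:
                    op.done = True
                    op.on_complete(False)
            self.watchers[key] = [op for op in ops if not op.done]


# Usage: an acks=all produce request waits until replication reaches offset 5.
replicated_up_to = {"t-0": 0}
pg = Purgatory()
op = DelayedOp(deadline_ms=1_000,
               is_satisfied=lambda: replicated_up_to["t-0"] >= 5,
               on_complete=lambda ok: print("satisfied" if ok else "timed out"))
pg.try_complete_else_watch(op, ["t-0"])  # not satisfied yet, so it is parked
replicated_up_to["t-0"] = 5              # followers catch up
pg.check_and_complete("t-0")             # prints "satisfied"
```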